Task-Incremental Learning on Long Text Sequences

Conference Paper

Details

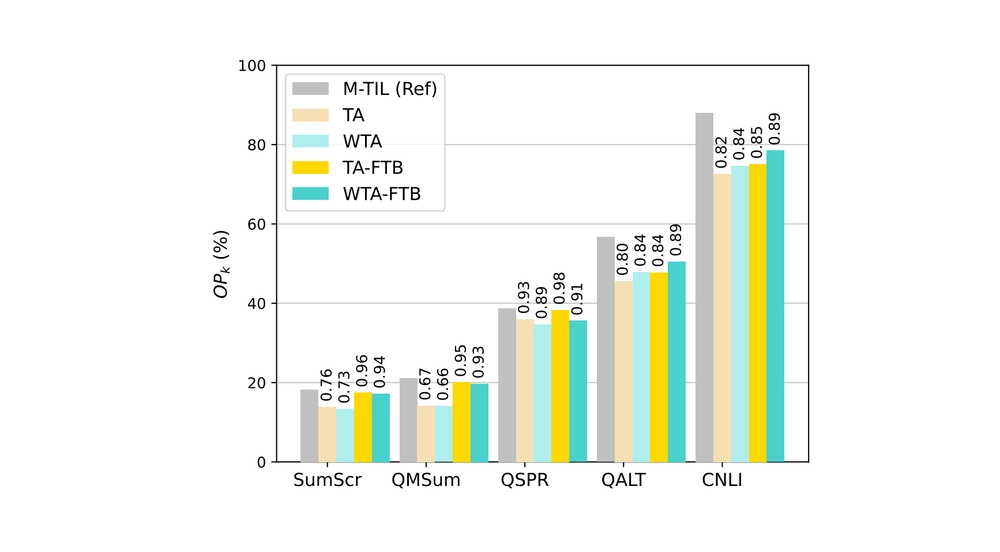

Can we continually learn from a stream of language-oriented tasks using Large Language Models? What if the input sequences are somewhat longer than usual? What is the impact of Task Arithmetic in LoRA-based models?

These are the challenging research questions addressed in this paper!

- Authors: Natalia Graziuso, Andrea Zugarini, Stefano Melacci

- Title: Task-Incremental Learning on Long Text Sequences

- Where: Italian Conference on Computational Linguistics (CLiC-it) 2024

Links

BibTeX

    @inproceedings{cmoss,
      author    = {Natalia Graziuso and Andrea Zugarini and Stefano Melacci},
      editor    = {Felice Dell'Orletta and Alessandro Lenci and Simonetta Montemagni and Rachele Sprugnoli},
      title     = {Task-Incremental Learning on Long Text Sequences},
      booktitle = {{Proceedings of the Tenth Italian Conference on Computational Linguistics (CLiC-it 2024)}},
      series    = {CEUR Workshop Proceedings (CEUR-WS.org, ISSN 1613-0073)},
      volume    = {TBA},
      pages     = {TBA},
      publisher = {CEUR},
      year      = {2024},
      url       = {https://clic2024.ilc.cnr.it/wp-content/uploads/2024/12/48_main_long.pdf},
      doi       = {TBA}
    }